From Vibe Coding to Vibe Spec'ing: What I Got Wrong

After sharing my experience with Spec-Driven Development and Claude Code, I received some valuable feedback that highlighted a crucial blind spot in my approach. It turns out the problem wasn't with my original specifications; it was that I hadn't properly reviewed the specs that Claude Code and Tessl had generated.

The Real Issue

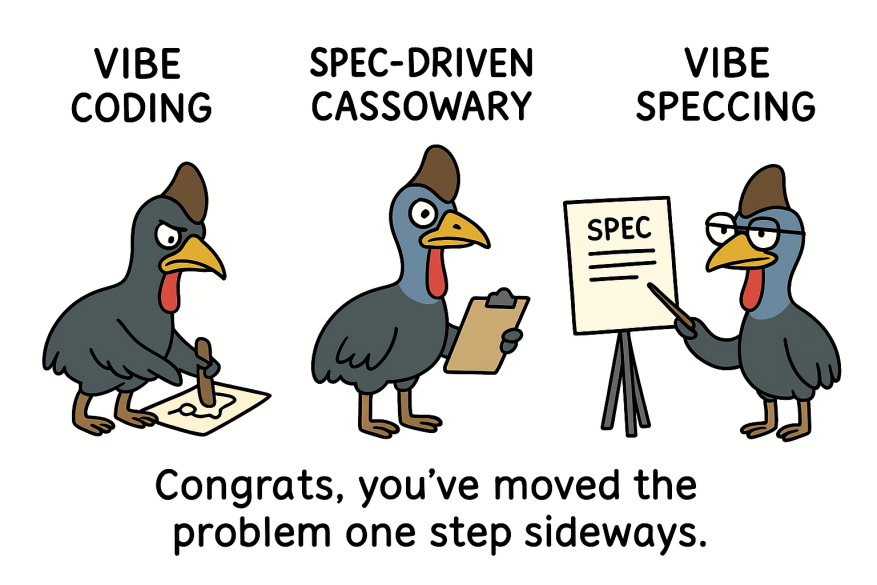

The feedback pointed out something I'd missed entirely. Whilst I'd moved away from "vibe coding" (throwing vague prompts at AI and hoping for the best), I'd fallen into a different trap: trusting generated specifications without proper review.

The core insight: making the spec the most important artifact in the workflow means you actually have to read and validate what gets generated, not just assume it's correct.

What I Actually Did Wrong

Looking back, my original README and requirements were fine. The problem was that I didn't thoroughly read the specifications that Claude Code and Tessl created from them.

The first red flag should have been this capability specification:

### Send prompt to Gemini LLM

Sends the provided prompt to the specified Gemini model using LangChain and returns the response.

- Mocked Gemini returns `"mocked response"`: prints `"mocked response"` and completes normally [@test](../tests/test_send_prompt_success.py)
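To make the problem concrete, here is a minimal Python sketch of what a test matching that description could look like. The `send_prompt` signature and its `llm_factory` parameter are hypothetical. The factory stands in for constructing LangChain's `ChatGoogleGenerativeAI`, so the example runs without LangChain or an API key. A real test would more likely patch that class with `unittest.mock.patch`.

```python
import io
from contextlib import redirect_stdout
from types import SimpleNamespace
from unittest import mock


def send_prompt(prompt: str, model: str, api_key: str, llm_factory) -> str:
    """Hypothetical code under test: build a chat client, send the prompt, print and return the reply."""
    llm = llm_factory(model=model, api_key=api_key)
    content = llm.invoke(prompt).content
    print(content)
    return content


def test_send_prompt_success():
    # The fake client is told to return a canned reply...
    fake_llm = mock.Mock()
    fake_llm.invoke.return_value = SimpleNamespace(content="mocked response")
    factory = mock.Mock(return_value=fake_llm)

    out = io.StringIO()
    with redirect_stdout(out):
        send_prompt("Hello", "gemini-1.5-pro", "fake-key", llm_factory=factory)

    # ...and the only assertion is that the canned reply comes back out.
    # Nothing checks the model name, the API key, or the prompt that was sent.
    assert out.getvalue().strip() == "mocked response"
```

This test would still pass if `send_prompt` ignored its `model` and `api_key` arguments entirely. The fake factory accepts any arguments, and the assertion only looks at the value the mock was told to return.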

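I should have spotted immediately that this was describing a meaningless test. It configures a mock to return `"mocked response"` and then checks that `"mocked response"` comes out, which verifies the mock rather than my code. After I reprimanded Claude about the usefulness of this test, it generated a much better capability specification: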

### Send prompt to Gemini LLM

Sends the provided prompt to the specified Gemini model using LangChain and returns the response.

- Creates ChatGoogleGenerativeAI instance with correct model and API key, returns response content [@test](../tests/test_send_prompt_integration.py)

- Handles LangChain API exceptions by re-raising them [@test](../tests/test_send_prompt_langchain_error.py)

- Processes empty prompt through LangChain correctly [@test](../tests/test_send_prompt_empty_input.py)
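The improved capability spec translates into tests that check what the code does with its inputs instead of echoing the mock. The following is a minimal sketch built on a hypothetical `send_prompt` that takes an `llm_factory` in place of constructing `ChatGoogleGenerativeAI` directly. It uses a plain `RuntimeError` as a stand-in for whatever exception LangChain actually raises. Each test function is named after the test file the spec links to.

```python
from types import SimpleNamespace
from unittest import mock


def send_prompt(prompt: str, model: str, api_key: str, llm_factory) -> str:
    """Hypothetical code under test; it has no error handling, so client exceptions propagate."""
    llm = llm_factory(model=model, api_key=api_key)
    content = llm.invoke(prompt).content
    print(content)
    return content


def make_factory(content="ok", error=None):
    """Build a fake client factory whose client's invoke() returns `content` or raises `error`."""
    fake_llm = mock.Mock()
    if error is not None:
        fake_llm.invoke.side_effect = error
    else:
        fake_llm.invoke.return_value = SimpleNamespace(content=content)
    return mock.Mock(return_value=fake_llm), fake_llm


def test_send_prompt_integration():
    factory, fake_llm = make_factory(content="Paris")
    result = send_prompt("Capital of France?", "gemini-1.5-pro", "test-key", llm_factory=factory)
    # Check the wiring: correct model and key in, exact prompt sent, content returned.
    factory.assert_called_once_with(model="gemini-1.5-pro", api_key="test-key")
    fake_llm.invoke.assert_called_once_with("Capital of France?")
    assert result == "Paris"


def test_send_prompt_langchain_error():
    # RuntimeError is a stand-in for the real LangChain/API exception type.
    factory, _ = make_factory(error=RuntimeError("quota exceeded"))
    try:
        send_prompt("hi", "gemini-1.5-pro", "test-key", llm_factory=factory)
    except RuntimeError as exc:
        assert str(exc) == "quota exceeded"
    else:
        raise AssertionError("expected the API error to propagate")


def test_send_prompt_empty_input():
    factory, fake_llm = make_factory(content="")
    assert send_prompt("", "gemini-1.5-pro", "test-key", llm_factory=factory) == ""
    # The empty prompt is passed through to the client unchanged.
    fake_llm.invoke.assert_called_once_with("")
```

The client is still faked, but the assertions now pin down the model name, the API key, the exact prompt sent, and the failure behaviour. With pytest, the error case would normally be written with `pytest.raises`.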

The second issue I missed was even more concerning. The specification included this capability:

### Load model configuration

Loads the model name from environment variables with fallback to default.

- Returns "gemini-1.5-pro" when `DEFAULT_MODEL` is not set in environment [@test](../tests/test_load_model_default.py)

- Returns custom model when `DEFAULT_MODEL` is set in environment [@test](../tests/test_load_model_custom.py)
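Here is a minimal sketch of this capability and both of its tests. It assumes a hypothetical `load_model()` that reads `DEFAULT_MODEL` with `os.environ.get`. `mock.patch.dict` sets or clears the variable only for the duration of each test, so neither test depends on the machine's real environment.

```python
import os
from unittest import mock

FALLBACK_MODEL = "gemini-1.5-pro"


def load_model() -> str:
    """Hypothetical loader: DEFAULT_MODEL from the environment, else the fallback."""
    return os.environ.get("DEFAULT_MODEL", FALLBACK_MODEL)


def test_load_model_default():
    # clear=True empties os.environ inside the block and restores it afterwards.
    with mock.patch.dict(os.environ, {}, clear=True):
        assert load_model() == "gemini-1.5-pro"


def test_load_model_custom():
    # The test the spec promised; "my-custom-model" is an arbitrary example value.
    with mock.patch.dict(os.environ, {"DEFAULT_MODEL": "my-custom-model"}):
        assert load_model() == "my-custom-model"
```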

If you follow the test links in the actual spec file, you'll see that `test_load_model_custom.py` was never created, even though the spec lists it. I trusted that Claude Code would implement every capability and test the spec described, but clearly that's not something I can take for granted.

Claude Code did eventually notice the missing test when I later prompted it about useless tests, but it never acknowledged that it had skipped the test in the first place.

The Mental Adjustment

The feedback highlighted something I'd underestimated: trusting generated specifications without proper validation is just as dangerous as trusting generated code without review.

The workflow isn't just about having AI generate specs; it's about becoming a more careful reviewer of those specs. AI tools can write specifications that look professional but contain fundamental flaws or omissions, such as tests that only exercise mocks or test files that are promised but never written.

A Better Approach

Rather than being treated as authoritative, generated specs need the same scrutiny as generated code:

Validate test descriptions: Check that each test specification describes meaningful verification of real behaviour, not just verification that a mock returns what it was told to.

Cross-reference outputs: Ensure that promised test files actually exist and implement what the spec claims.

Question circular logic: If a test specification sounds like it's only testing that mocking works, it probably is.
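The cross-referencing and mock-spotting checks are easy to partly automate. Below is a minimal sketch that assumes spec files in which each test is a bullet ending in a `[@test](relative/path)` link, with paths relative to the spec file, as in the excerpts above. The `review_spec` function and the keyword heuristic are hypothetical. A keyword match is only a prompt to go and read the test, not proof that it's useless.

```python
import re
from pathlib import Path

# Matches spec bullets of the form:  - <description> [@test](<relative path>)
TEST_LINK = re.compile(r"^\s*-\s+(?P<desc>.*?)\s*\[@test\]\((?P<path>[^)]+)\)")

# Hypothetical heuristic: test descriptions that talk about mocks deserve a second look.
SUSPICIOUS_WORDS = ("mock", "stub")


def review_spec(spec_path: Path) -> list[str]:
    """Return human-readable problems found in one spec file."""
    problems = []
    lines = spec_path.read_text(encoding="utf-8").splitlines()
    for lineno, line in enumerate(lines, start=1):
        match = TEST_LINK.match(line)
        if not match:
            continue
        desc, rel_path = match.group("desc"), match.group("path")
        # Test links are resolved relative to the spec file's own directory.
        test_file = (spec_path.parent / rel_path).resolve()
        if not test_file.is_file():
            problems.append(f"{spec_path.name}:{lineno}: missing test file {rel_path}")
        if any(word in desc.lower() for word in SUSPICIOUS_WORDS):
            problems.append(f"{spec_path.name}:{lineno}: test may only exercise a mock: {desc!r}")
    return problems
```

Run over every spec file (for example, from a pre-commit hook), a check like this would have flagged both problems described in this post: the missing `test_load_model_custom.py` and the mocked-response test. It can't tell you whether a test is meaningful; that still takes a human read.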

The Real Learning

The original issue wasn't that my requirements were too vague; it was that I wasn't sceptical enough of generated artifacts. AI-assisted development requires active verification at every step, including (especially) the specifications that drive everything else.

This workflow works, but only if you read what it generates as carefully as you'd review a junior developer's work. That includes the specs, not just the code.

Thanks to the AI Native Dev Discord community for the feedback that prompted this reflection.
